Scaling Clear Street’s Trade Capture System

At the heart of Clear Street’s technical machinery is our Trade Capture system, responsible for processing, clearing, and settling all incoming trades into our platform. The first version of the system was designed to support the initial, invitation-only launch of our platform. In the coming years, we expect to support 100x our initial trade volume and beyond. To support an expansion of that magnitude, we needed a fundamental redesign — one that easily adapts to our continuous growth.

Background

Let’s start with a brief summary of the post-trade workflow to give context for the technical decisions that follow. This is by no means an exhaustive overview of the full trade lifecycle (there’s literally an entire book written on that); we cover only the parts relevant to this post. Note that this discussion focuses on the process for U.S. equities only.

Life of a Trade

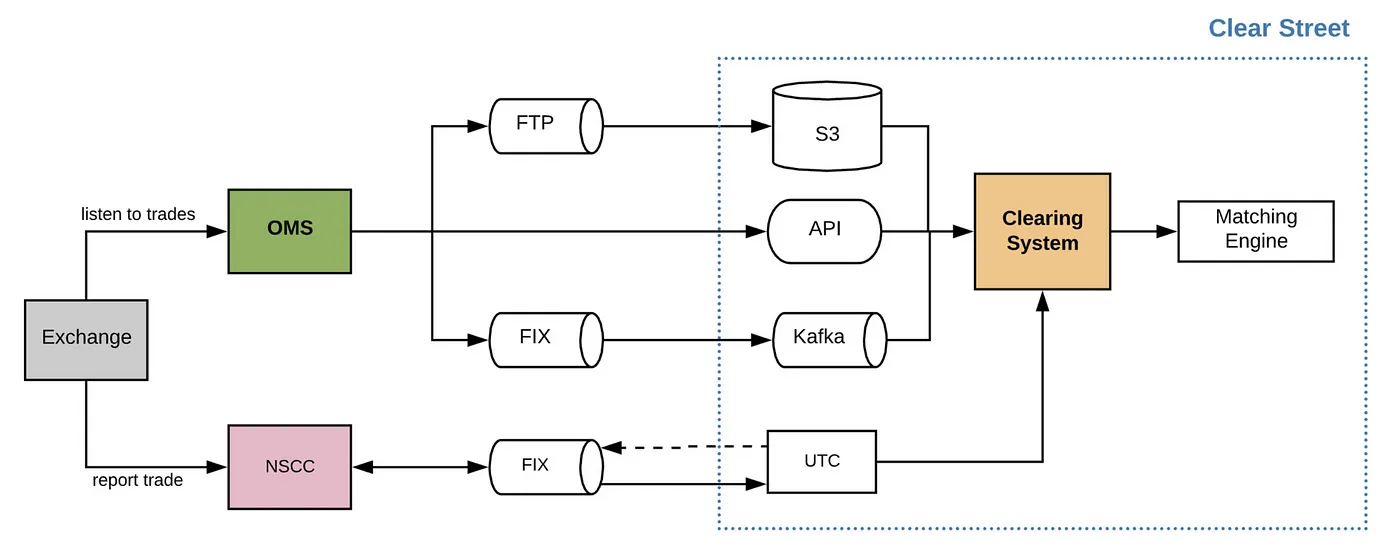

Orders initially go through an Order Management System (OMS), which is in charge of execution. Each order is then routed to an exchange or market center (e.g., NYSE) that matches orders between buyer and seller of the stock. When a match is found, the exchange informs the OMS of the successful execution of the order, at which point it becomes a trade. After that, the OMS routes the trade to a Clearing System (e.g., Clear Street) that is responsible for the clearing and settlement process.

In parallel, trades from all market centers are routed to the NSCC (in the US), which in turn sends instructions on the trade to the Clearing System over a FIX connection, through its Universal Trade Capture (UTC) service. A reconciliation process then matches house trades (from the OMS) against street trades (from the NSCC). After 2 business days, the trade is settled (i.e., shares are exchanged between the two parties involved in the trade) through a process called continuous net settlement (CNS).

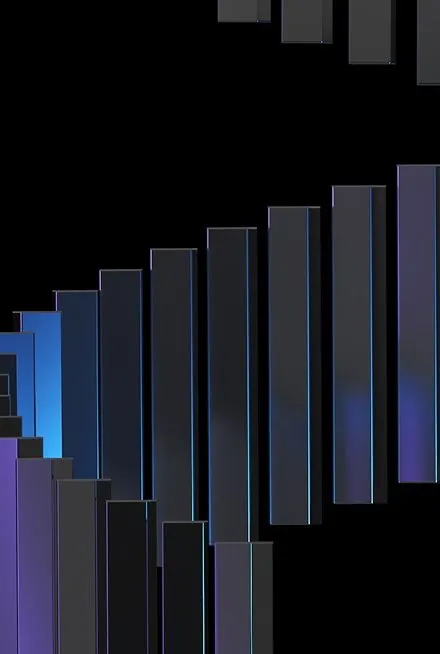

As shown above, trades coming from the Order Management System (OMS) enter our clearing system through 3 main ingress points:

- HTTP requests to our client-facing API endpoints

- CSV files ingested via FTP, and uploaded to AWS S3

- Trade ingestion via FIX, transformed into Kafka events

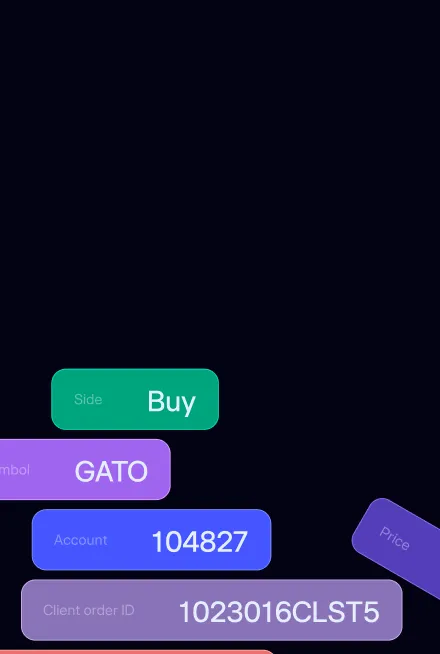

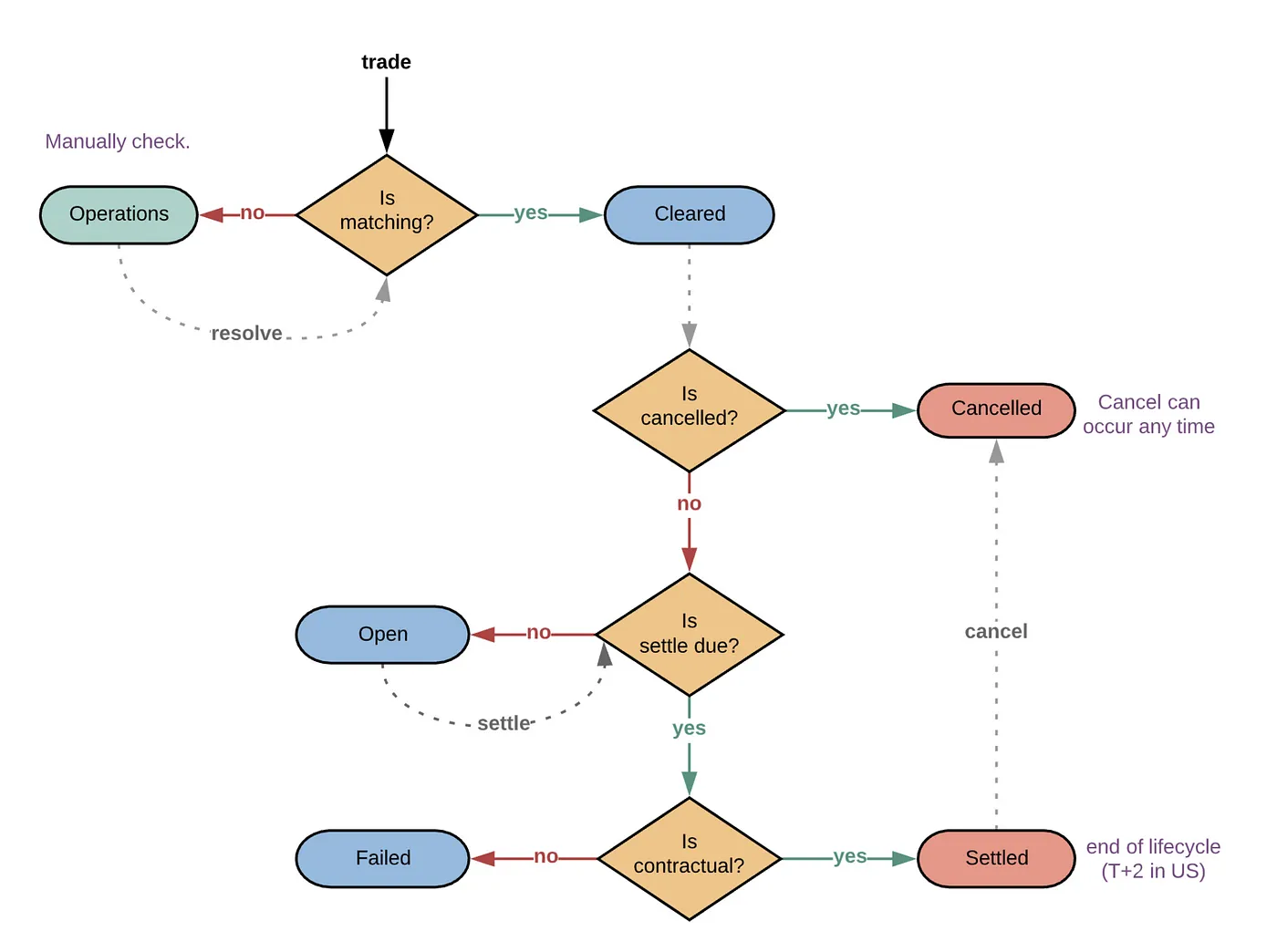

After a trade is matched and cleared, it transitions through several stages during its lifetime toward being settled. The primary states of interest are:

- Cleared: On creation, a trade’s details are verified to ensure they match with our records.

- Settled: After clearing, a trade enters a settlement period, which gives the buyer and seller time to do what’s necessary to fulfill their part of the trade. The trade is settled at the end of that period, typically 2 business days after the trade date (i.e., T+2), as determined by the SEC.

- Failed: Trades that cannot be settled through CNS qualify for fail processing. That workflow undergoes additional stages before getting settled. (Those stages are not covered here for brevity.)

- Cancelled: A trade can be cancelled at any point within its lifecycle, even after clearing or settlement. The cancelled state is terminal.
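The states above can be read as a small state machine. The following is a minimal sketch of that reading, not our production model: the transition table is inferred from the descriptions in the list, the intermediate fail-processing stages are collapsed into a single `FAILED -> SETTLED` step, and the names `TradeState` and `transition` are illustrative.

```python
from enum import Enum


class TradeState(Enum):
    CLEARED = "cleared"
    SETTLED = "settled"
    FAILED = "failed"
    CANCELLED = "cancelled"


# Assumed transition table, inferred from the state descriptions.
# A trade can be cancelled from any non-terminal state, even after settlement.
ALLOWED_TRANSITIONS = {
    TradeState.CLEARED: {TradeState.SETTLED, TradeState.FAILED, TradeState.CANCELLED},
    TradeState.FAILED: {TradeState.SETTLED, TradeState.CANCELLED},
    TradeState.SETTLED: {TradeState.CANCELLED},
    TradeState.CANCELLED: set(),  # terminal: no way out
}


def transition(current: TradeState, target: TradeState) -> TradeState:
    """Return the new state, or raise ValueError if the move is not allowed."""
    if target not in ALLOWED_TRANSITIONS[current]:
        raise ValueError(f"illegal transition: {current.value} -> {target.value}")
    return target
```

Keeping the table as data makes it easy to add the fail-processing stages later without touching the transition logic.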

Architecture

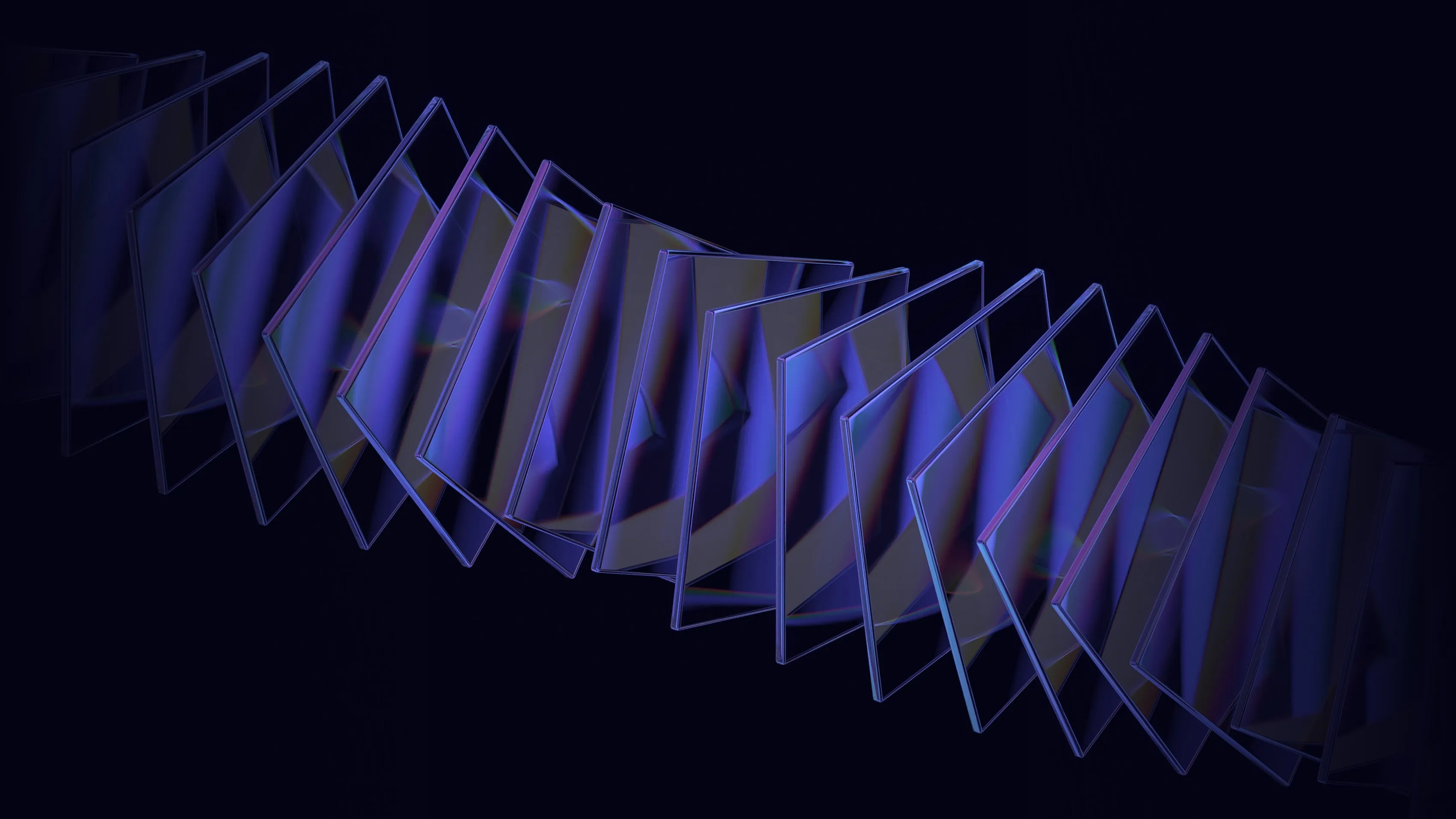

As mentioned earlier, a primary goal of the new design was to accommodate high-throughput traffic and enable horizontal scaling. That cannot come at the expense of consistency and integrity of behavior, since inconsistencies can have serious implications. The system can tolerate some latency, with processing times in the sub-second range. Availability should be high enough to avoid prolonged downtime, but we also want to handle extended periods of failure gracefully, without interrupting the trade flow coming in from clients. These were some of the considerations we had in mind when devising the design.

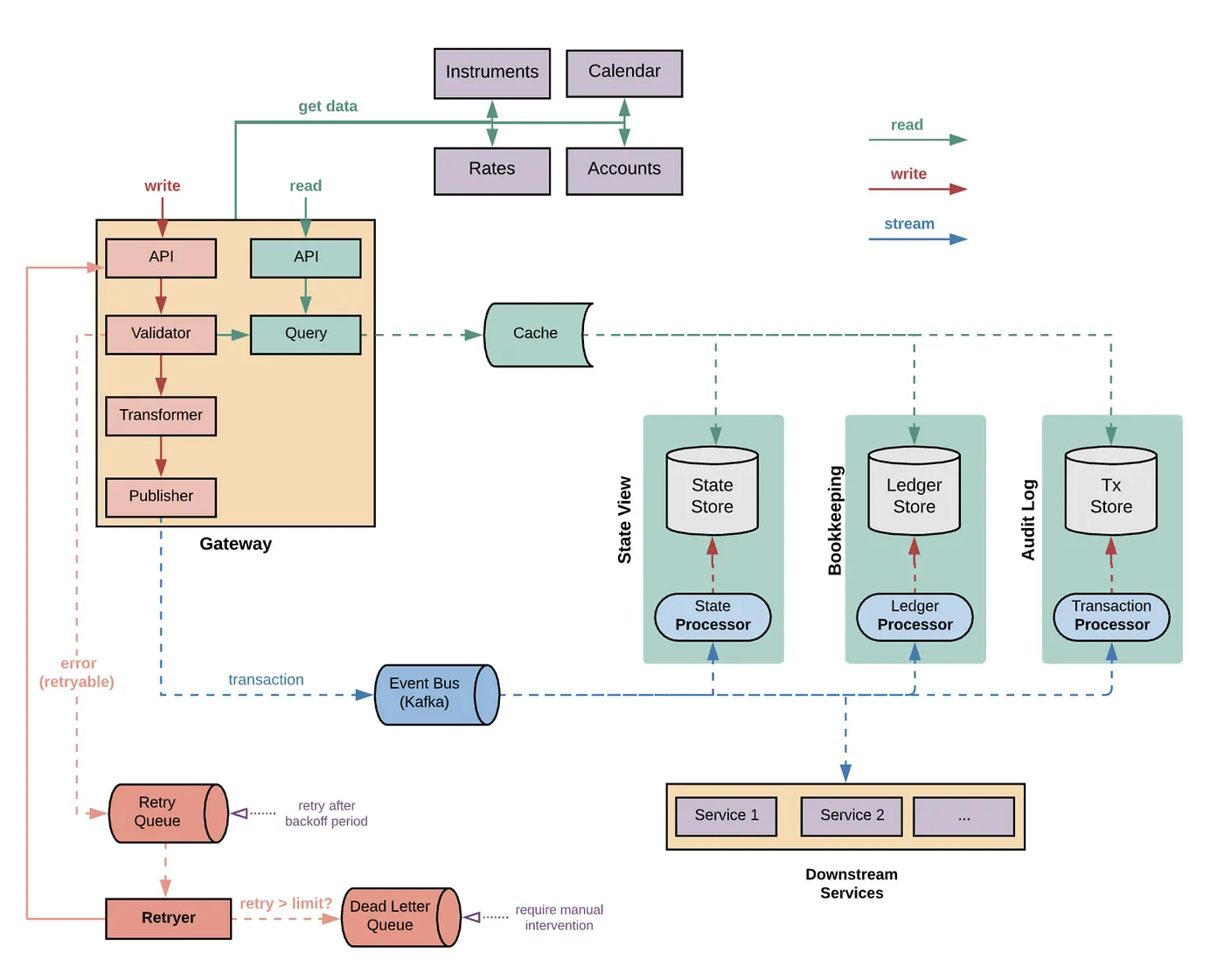

The figure below depicts a simplified, high-level design of our system. We’ll drill into the main components in the sections that follow.

Gateway

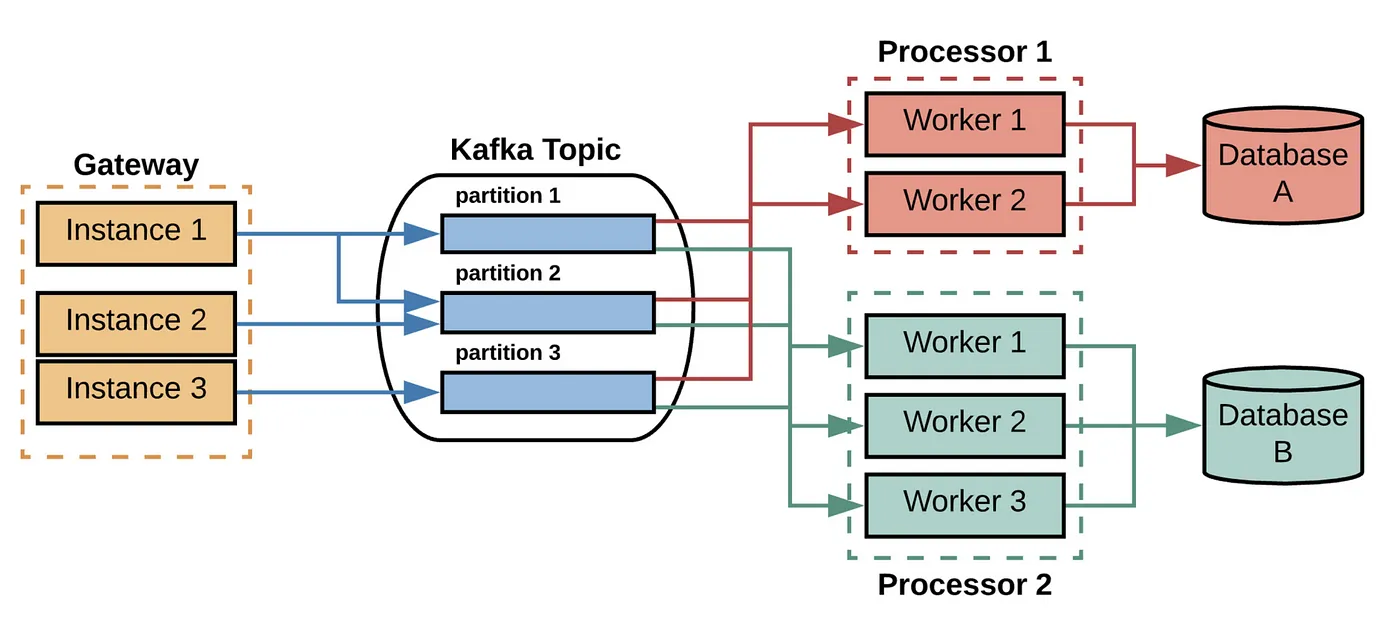

The Gateway component serves as a common API Gateway for all trade requests (read and write paths). Unifying the API interface simplifies client integration, and also effectively abstracts the remainder of the system. All necessary transformation and validation logic on receiving a trade request is performed upfront in the Gateway. This ensures, to the extent possible, that we maintain the integrity of data propagated downstream. To improve isolation, we have a different gateway role for each input type (e.g., file, stream, API), which can be scaled independently.
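To make the "validate and transform upfront" idea concrete, here is a minimal sketch of a normalization step that every gateway role could share. The field names (`account_id`, `symbol`, `side`, `quantity`, `price`) and the `TradeRequest` and `normalize` names are assumptions for illustration, not our actual request schema.

```python
from dataclasses import dataclass
from decimal import Decimal, InvalidOperation

REQUIRED_FIELDS = ("account_id", "symbol", "side", "quantity", "price")


@dataclass(frozen=True)
class TradeRequest:
    account_id: str
    symbol: str
    side: str  # "buy" or "sell"
    quantity: int
    price: Decimal


class ValidationError(ValueError):
    """Raised when a raw payload cannot be turned into a TradeRequest."""


def normalize(raw: dict) -> TradeRequest:
    """Validate a raw payload (e.g. a parsed JSON body or CSV row) and normalize it."""
    missing = [field for field in REQUIRED_FIELDS if field not in raw]
    if missing:
        raise ValidationError(f"missing fields: {missing}")

    side = str(raw["side"]).strip().lower()
    if side not in ("buy", "sell"):
        raise ValidationError(f"unknown side: {raw['side']!r}")

    try:
        quantity = int(raw["quantity"])
        price = Decimal(str(raw["price"]))
    except (TypeError, ValueError, InvalidOperation) as exc:
        raise ValidationError(f"bad numeric field: {exc}") from exc

    # Reject NaN/Infinity first; ordering comparisons on NaN would raise.
    if not price.is_finite() or quantity <= 0 or price <= 0:
        raise ValidationError("quantity and price must be positive")

    return TradeRequest(
        account_id=str(raw["account_id"]).strip(),
        symbol=str(raw["symbol"]).strip().upper(),
        side=side,
        quantity=quantity,
        price=price,
    )
```

Because file, stream, and API inputs all reduce to the same `TradeRequest` shape in this sketch, everything downstream of the gateway only has to understand one format.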

Event Source

After a trade request is processed, it is transformed into a transaction (i.e., a trade action) and published to our event bus (Kafka). The design leverages event sourcing: the event stream acts as the source of truth for all transactions committed to our system. Persisting directly to Kafka and bypassing the database on the critical path keeps request serving from being storage-bound, which considerably improves performance, throughput, and scalability.

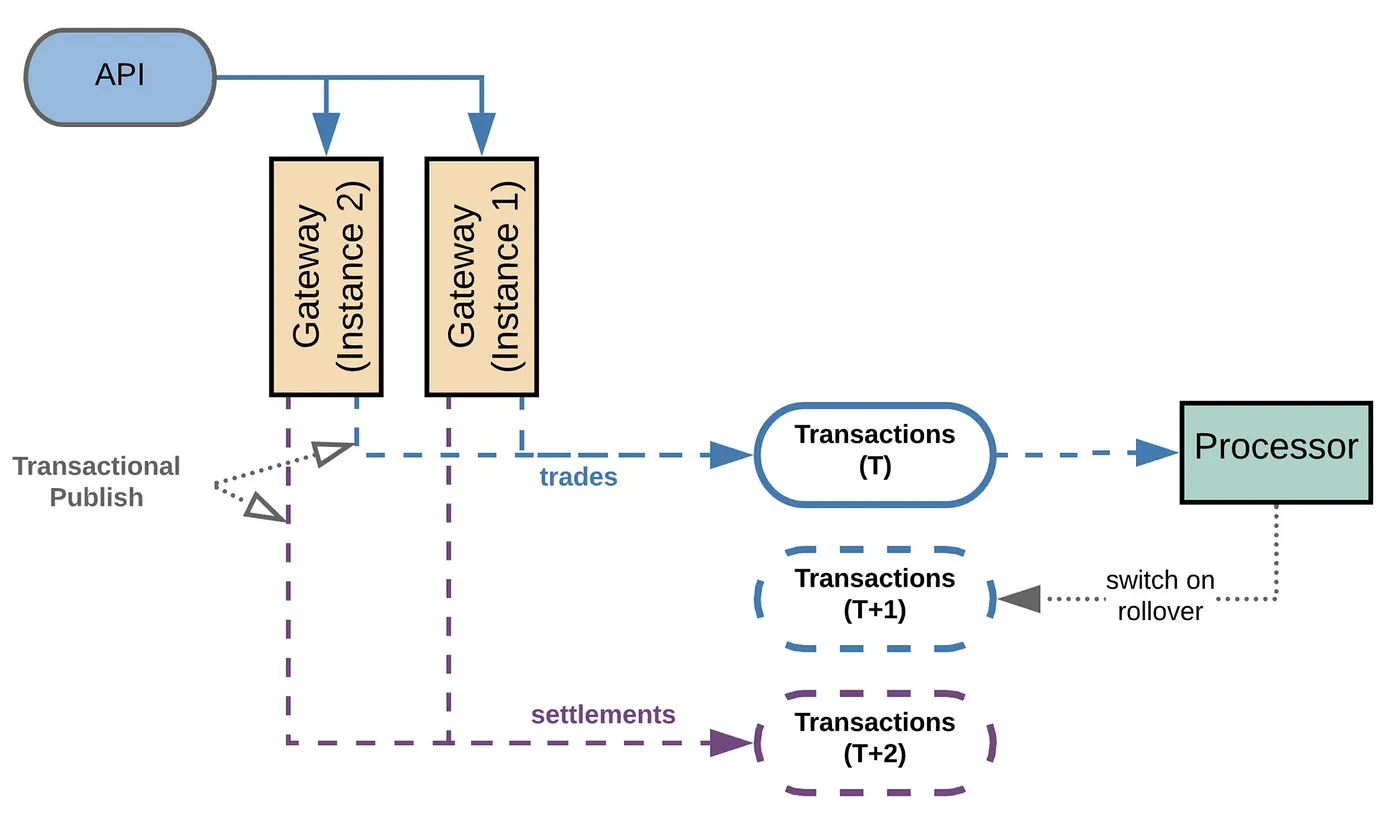

Dated Topics

Transactions are associated with an effective date (the date on which they are executed), which can be in the future. For example, when a trade is created, a settlement transaction is created along with it and scheduled for 2 days after the trade date. Furthermore, events are received within a set time window during the day, after which there is a cutoff to the next day. The process of transitioning to the next trading date is called “rollover.”

To address these requirements, we decided to create a Kafka topic per date. This gives us a clean separation of each day’s trading activity, and allows us to defer execution of transactions by inserting them into a future-dated topic. For requests that result in transactions across multiple days, we leverage Kafka’s transactional producer to ensure atomicity of the operation. Lastly, on rollover, the downstream consumers switch to consuming from the new date’s topic.

Note: Currently, the settlement cadence for incoming trades is 2 days (T+2), since we primarily operate in the US at the moment. However, the system can easily accommodate T+N settlements where N >= 0.
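As a rough illustration of how a trade maps onto dated topics, the sketch below computes the topic for the trade date and the topic for the T+N settlement date. The `transactions.<date>` naming scheme and the function names are hypothetical, and the business-day logic skips weekends only; a real calendar would also account for market holidays.

```python
from datetime import date, timedelta


def add_business_days(start: date, n: int) -> date:
    """Advance n business days from start, skipping Saturdays and Sundays.

    Assumption: market holidays are ignored in this sketch.
    """
    current = start
    remaining = n
    while remaining > 0:
        current += timedelta(days=1)
        if current.weekday() < 5:  # Monday=0 ... Friday=4
            remaining -= 1
    return current


def dated_topic(effective_date: date, prefix: str = "transactions") -> str:
    """Build a per-date topic name (hypothetical naming scheme)."""
    return f"{prefix}.{effective_date.isoformat()}"


def topics_for_trade(trade_date: date, settle_after_days: int = 2) -> dict:
    """Return the topics that receive a trade's creation and settlement transactions."""
    settle_date = add_business_days(trade_date, settle_after_days)
    return {
        "create": dated_topic(trade_date),
        "settle": dated_topic(settle_date),
    }


# A trade made on Thursday 2024-01-04 settles on Monday 2024-01-08 under T+2.
print(topics_for_trade(date(2024, 1, 4)))
```

In this sketch the two writes are only computed, not published. In a setup like the one described above, both would be sent inside a single Kafka transaction so that either both topics receive their event or neither does.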

Processors

On the receiving end, we maintain 3 main materialized views of the transaction stream:

- State View: tracks state of all trades as presented in the trade lifecycle.

- Audit Log: stores an audit trail of transactions for historical purposes.

- Ledgers: maintains a bookkeeping view of client accounts, used for financial reporting.

We chose to segregate the processors for the different views to enable parallelization and promote separation of concerns (SoC). This also gives us flexibility in optimizing the query patterns for each view, for instance by adopting a different storage solution for each (e.g., PostgreSQL for ledgers, DynamoDB for the audit trail). This fits naturally with the CQRS pattern, where read and write data models are allowed to diverge. Isolation also avoids a single point of failure (SPoF) in the system, and allows for independent scaling.

The tradeoff with this approach is eventual consistency: because views are updated by consuming events off a stream, they can lag behind it. Additionally, since each workflow is processed independently, there is no guarantee that all views are in sync at any given time. In our case, a small lag is tolerable since the processors are mostly independent.
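The toy example below illustrates independent processors and the resulting lag. It uses a plain Python list in place of a Kafka topic, and the event fields (`trade_id`, `account_id`, `action`, `amount_cents`) and class names are assumptions for illustration. Each view tracks its own offset, so one view can be ahead of another at any given moment.

```python
from collections import defaultdict

# Assumed mapping from transaction actions to lifecycle states.
ACTION_TO_STATE = {"create": "cleared", "settle": "settled", "cancel": "cancelled"}


class StateView:
    """Tracks the latest lifecycle state of each trade."""

    def __init__(self):
        self.offset = 0
        self.states = {}

    def apply(self, event):
        self.states[event["trade_id"]] = ACTION_TO_STATE[event["action"]]


class LedgerView:
    """Keeps a running balance per account, updated on settlement only."""

    def __init__(self):
        self.offset = 0
        self.balances = defaultdict(int)

    def apply(self, event):
        if event["action"] == "settle":
            self.balances[event["account_id"]] += event["amount_cents"]


def catch_up(view, log, max_events=None):
    """Feed the view events past its own offset, optionally capped at max_events."""
    end = len(log) if max_events is None else min(len(log), view.offset + max_events)
    for event in log[view.offset:end]:
        view.apply(event)
    view.offset = end


log = [
    {"trade_id": "t1", "account_id": "acct-1", "action": "create", "amount_cents": 0},
    {"trade_id": "t1", "account_id": "acct-1", "action": "settle", "amount_cents": -1895000},
]

state_view, ledger_view = StateView(), LedgerView()
catch_up(state_view, log)                 # fully caught up: t1 is "settled"
catch_up(ledger_view, log, max_events=1)  # lagging: settlement not yet applied
catch_up(ledger_view, log)                # now consistent with the state view
```

Between the second and third `catch_up` calls, the state view already reports the trade as settled while the ledger has not yet booked it, which is exactly the window of inconsistency described above.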

Retryer

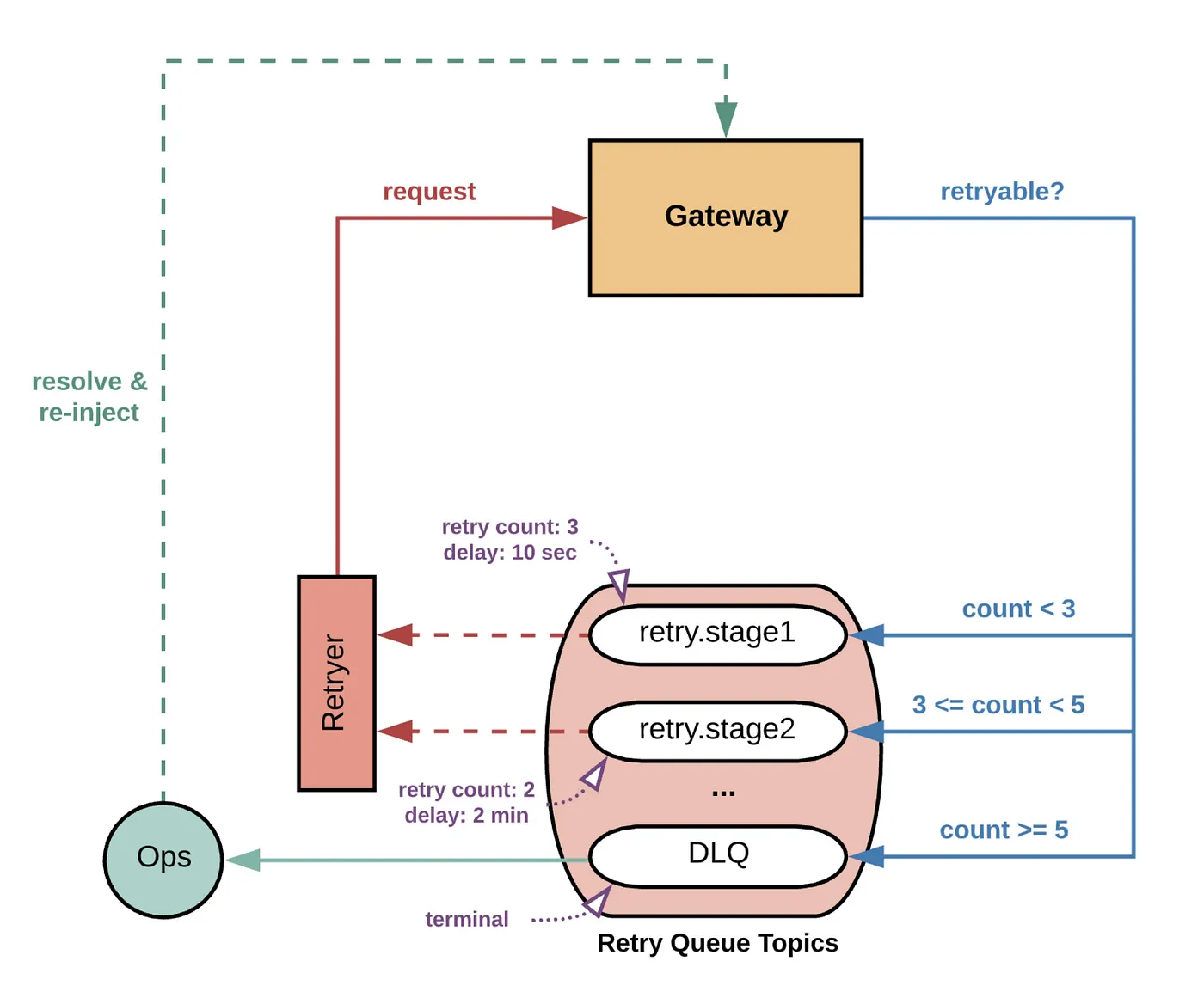

On transient failures, we want a reliable way to retry operations without blocking execution or failing hard. Examples include race conditions, database glitches, or connectivity issues that resolve naturally once processing is delayed. The majority of failures in this category can be handled by adding more resiliency in the app layer (e.g., request retry logic). However, some resolvable failures can be more prolonged (e.g., not being able to resolve a trade’s stock symbol). To handle these situations gracefully, we introduced the following staged retry mechanism:

- Retry Queue: Failed transactions that are retryable are added to a retry queue and scheduled for processing after an induced delay. On retry, the transaction is sent back through the gateway to be re-processed. Transactions that keep failing are re-inserted into the retry queue up to a set retry count limit. We can also have several retry layers with varying settings as needed. We leverage SQS for our retry queues, given its FIFO queues, exactly-once processing, and delayed delivery features.

- Dead-Letter Queue (DLQ): Failed transactions that exceed the retry limit, or that require manual intervention, are added to a DLQ. The operations team is then notified so it can resolve the issue or notify the client of the failure. These cases are rare, given that most error categories are remediable.
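The staged mechanism above can be sketched in a few lines. This is an in-memory stand-in, not an SQS integration: a heap ordered by ready time plays the role of the delayed retry queue, and `MAX_RETRIES`, `BASE_DELAY_SECONDS`, `RetryableError`, and `Retryer` are all hypothetical names and settings.

```python
import heapq
import itertools

MAX_RETRIES = 3          # assumed retry count limit
BASE_DELAY_SECONDS = 30  # assumed induced delay before each retry


class RetryableError(Exception):
    """A failure expected to resolve on its own after a delay."""


class Retryer:
    def __init__(self, handler):
        self.handler = handler      # stands in for re-submission to the gateway
        self.retry_queue = []       # heap of (ready_at, seq, attempt, txn)
        self.dead_letters = []      # stands in for the DLQ
        self._seq = itertools.count()  # tie-breaker so txns are never compared

    def submit(self, txn, now, attempt=0):
        """Process txn; on failure, schedule a retry or dead-letter it."""
        try:
            self.handler(txn)
        except RetryableError:
            if attempt >= MAX_RETRIES:
                self.dead_letters.append(txn)  # retry limit exceeded
            else:
                ready_at = now + BASE_DELAY_SECONDS
                entry = (ready_at, next(self._seq), attempt + 1, txn)
                heapq.heappush(self.retry_queue, entry)
        except Exception:
            self.dead_letters.append(txn)  # non-retryable: needs manual review

    def drain(self, now):
        """Re-process every queued transaction whose delay has elapsed."""
        while self.retry_queue and self.retry_queue[0][0] <= now:
            _, _, attempt, txn = heapq.heappop(self.retry_queue)
            self.submit(txn, now, attempt)
```

With these settings, a transaction that always raises `RetryableError` is attempted four times in total (the initial attempt plus three retries) before landing in `dead_letters`. Several retry layers with different delays could be modeled as multiple `Retryer` instances chained together.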

Note: Uber adopted a similar solution, which is worth the read.

Conclusion

With the new design, we’ve considerably improved the scalability of our system. We can seamlessly adapt to traffic growth by adding more compute and/or resizing our Kafka cluster (i.e., horizontal scaling). The main unit of scale, which reflects how much throughput the system can accommodate, is the number of partitions per dated topic. Additionally, segregating data persistence for each processor allows us to scale our database layer independently, or to adopt a different storage solution for each workflow as suitable.

There were many more underlying technical challenges inherent in designing and implementing this system that weren’t portrayed here, some of which were quite interesting and unique to our problem space. We’ll shed more light on those problems and how we addressed them in future posts. Stay tuned!

Yours Truly,

Platform Engineering, Core Systems Team
