Ephemeral Dev Environments as a Service

Testing is important, especially at Clear Street. Our system processes millions of trades a day and correctness is vital in our industry. Before Ivy (a.k.a. Clear Street’s branch deploy service; more on that later), developers had two main environments where they could interactively end-to-end test their code: their local Kubernetes environment and the Dev environment. We also ran unit tests and integration tests in CI and on our local machines.

A Clear Street developer’s local Kubernetes environment, or as we like to call it, localkube, is great because of its fast feedback loop and isolation. It allows developers to quickly develop new features for their applications as well as experiment with Kubernetes configuration changes such as liveness and readiness probes.

Even with all these benefits, local interactive testing has its limits. Namely, it’s hard to collaborate with others while testing a change. This is why we also utilize our Dev environment to test our services before merging changes to the main branch. Using SLeD (Clear Street’s service level deploys tool), our developers can “beta deploy” unmerged code to our Dev environment. Once a change is beta deployed, engineers, designers, operations staff, and other stakeholders can work together to ensure the change behaves as expected.

While beta deploys are great for test collaboration, they increase contention in our Dev environment. For example, an engineer may want to beta deploy their UI changes for a designer to verify that they work as expected, but other engineers cannot beta deploy the same service until the designer is done with their testing. As our teams scaled and more engineers worked on the same service, this contention only got worse. In addition, collaborative testing in our Dev environment is hampered by instability. Someone may beta deploy a buggy version of a service that I depend on, making it much harder to test my service.

For us, the natural solution to these problems was branch deploys: the ability to deploy any branch to an isolated and ephemeral environment. Our ideal workflow was simple:

- A user requests a branch deploy for their branch.

- Once the branch deploy environment is created, users are able to access services through a static URL (e.g., web.cool-meadow-380.ivy.co.clearstreet.io for our website).

- Users can push changes to their branch which are reflected in their branch deploy.

- The branch deploy environment is torn down once it’s no longer needed.

Building Ivy

We decided to call our branch deploy system Ivy, and we set about making it a reality. In addition to providing the branch deploy workflow above, we wanted Ivy to be fast and easy to use. We didn’t want users to have to wait around for branch deploys to be created, and we also wanted non-engineers to be able to use the system so all stakeholders of a service can be part of the testing.

Our work with localkube gave us a strong foundation on which to build Ivy. With localkube, we could essentially spin up our entire stack with a few simple Tilt commands. With that, Ivy’s job became quite streamlined. All Ivy had to do was:

- Accept a request to create a branch deploy with some set of Tilt arguments.

- Provision an EC2 instance and set up DNS for a custom domain (e.g., cool-meadow-380.ivy.co.clearstreet.io).

- Install dependencies and spin up a kind Kubernetes cluster on the EC2 instance.

- Run tilt up with the supplied arguments.

- Clean up all resources when the branch deploy is no longer needed.
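The steps above can be sketched as an ordered plan. This is only an illustration, not Ivy's actual code: the `BranchDeployRequest` and `Step` types, the step names, and the example Tilt argument are assumptions, and the provisioning and cleanup steps are left as descriptions rather than commands.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class BranchDeployRequest:
    branch: str                      # Git branch to deploy
    name: str                        # deploy name, e.g. "cool-meadow-380"
    tilt_args: List[str] = field(default_factory=list)


@dataclass
class Step:
    name: str
    description: str
    command: Optional[List[str]] = None  # None when no single CLI call applies


def creation_steps(req: BranchDeployRequest) -> List[Step]:
    """Return the ordered steps for one branch deploy (hypothetical layout)."""
    domain = f"{req.name}.ivy.co.clearstreet.io"
    return [
        Step("provision", f"create an EC2 instance and DNS records for {domain}"),
        Step("cluster", "start a kind cluster on the instance",
             ["kind", "create", "cluster", "--name", req.name]),
        Step("tilt", "bring the stack up with the requested Tilt arguments",
             ["tilt", "up", "--", *req.tilt_args]),
        Step("cleanup", "tear down all resources once the deploy is not needed"),
    ]


if __name__ == "__main__":
    request = BranchDeployRequest("feature/x", "cool-meadow-380", ["web"])
    for step in creation_steps(request):
        print(step.name, "->", step.command or step.description)
```

Keeping the plan as data makes it easy to log each step and to see where creation time is spent.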

We decided to use Terraform to provision and clean up AWS resources associated with a branch deploy. Once an EC2 instance is created, we use Ansible to connect to the instance and run some setup scripts. Ivy also listens to a webhook from our GitLab instance, and if there’s a push to a branch with a branch deploy we run a git pull on the instance to update its code and trigger rebuilds of services. Branch deploys are also cleaned up automatically if they are stale or if the branch has been merged, although users can opt out of auto termination. With Ivy working as intended, we decided to release it to everyone at Clear Street to use as part of their day-to-day workflow.
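As a rough sketch of the update and cleanup rules described above, the following shows how a push event might be matched to branch deploys and how auto termination might be decided. The `object_kind` and `ref` fields are part of GitLab's push webhook payload; the `BranchDeploy` type and the two-day staleness threshold are assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional

STALE_AFTER = timedelta(days=2)  # hypothetical threshold, not stated in the post


@dataclass
class BranchDeploy:
    name: str
    branch: str
    last_activity: datetime
    auto_terminate: bool = True  # users can opt out of auto termination


def branch_from_push(event: dict) -> Optional[str]:
    """Return the branch name from a GitLab push payload, or None."""
    if event.get("object_kind") != "push":
        return None
    ref = event.get("ref", "")
    prefix = "refs/heads/"
    return ref[len(prefix):] if ref.startswith(prefix) else None


def deploys_to_update(event: dict, deploys: List[BranchDeploy]) -> List[BranchDeploy]:
    """Deploys whose instance should run a git pull for this push."""
    branch = branch_from_push(event)
    if branch is None:
        return []
    return [d for d in deploys if d.branch == branch]


def should_terminate(deploy: BranchDeploy, now: datetime, branch_merged: bool) -> bool:
    """Clean up merged or stale deploys unless the user opted out."""
    if not deploy.auto_terminate:
        return False
    return branch_merged or (now - deploy.last_activity) > STALE_AFTER
```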

Using Ivy

There are two ways users interact with Ivy: a web UI and ChatOps on GitLab. Ivy also has an API, so automation such as CI can take advantage of its capabilities.

Web UI

The Ivy web UI has a dashboard that shows users their active and past branch deploys. From the dashboard, users can easily access a branch deploy as well as perform actions such as updates and terminations.

Ivy web UI user dashboard page

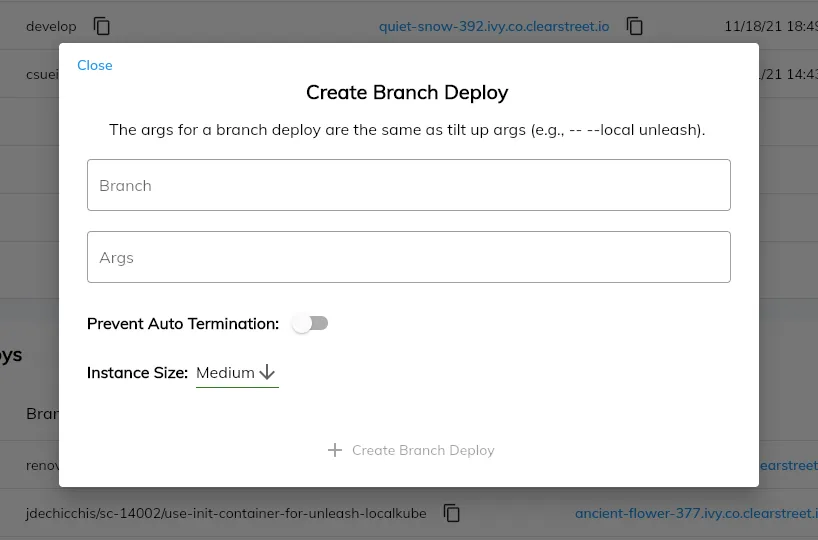

Users can easily create a new branch deploy by using the “+” icon and entering a branch name as well as some Tilt arguments.

Creating a branch deploy in the Ivy web UI

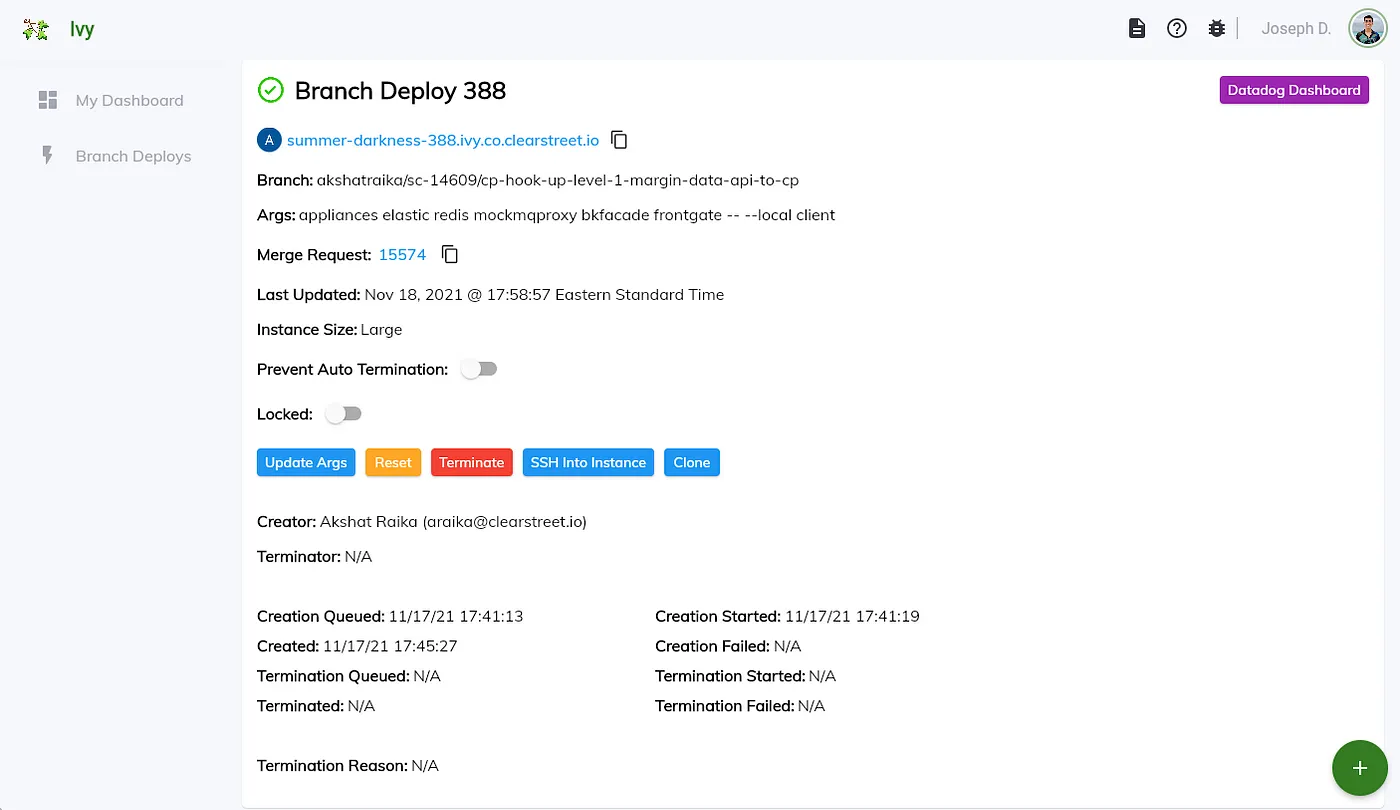

The branch deploy dashboard presents detailed information about a branch deploy. It also links to a DataDog dashboard with metrics related to the EC2 instance such as CPU and memory utilization.

Branch deploy details page

ChatOps on GitLab Merge Requests

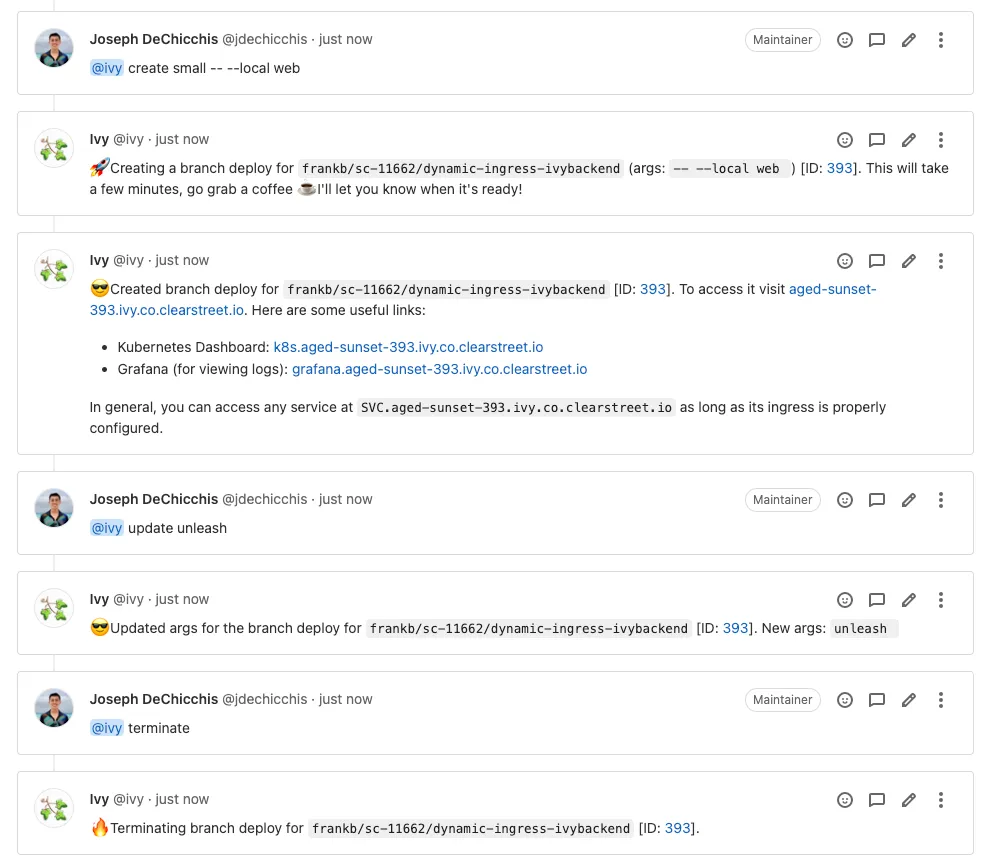

For our developers, one of the most intuitive ways to interact with Ivy is through ChatOps on an open GitLab merge request (i.e., pull request). Ivy has a simple chat interface for creating and managing branch deploys, so branch deploys integrate seamlessly with a developer’s natural workflow.

Example chat with Ivy to create, update, and terminate a branch deploy.

Challenges

We faced several challenges while building Ivy, but there are two, in particular, we’d like to highlight.

Networking

It took us several iterations to get networking to work in a way we felt was reliable. By networking, we mean the ability for a user to visit web.cool-meadow-380.ivy.co.clearstreet.io in their browser and access an instance of the website running in a branch deploy. Here’s a diagram that shows the hops a request to a branch deploy needs to take:

The route a request to a branch deploy takes

Traefik Setup

As the above diagram shows, a request to a branch deploy instance first goes through a Traefik proxy. This proxy accepts incoming requests and, if a request is for something in the kind cluster, rewrites the hostname to a format the Ingress Controller in the kind cluster can understand. Such rewritten requests are handed off to the Ingress Controller, which routes them to the appropriate service based on the hostname. This is an example of what a Traefik configuration for a branch deploy may look like:

    http:
      routers:
        tilt:
          rule: Host("cool-meadow-380.ivy.co.clearstreet.io")
          service: tilt
          tls:
            domains:
              - main: cool-meadow-380.ivy.co.clearstreet.io
        traefik:
          rule: Host("traefik.cool-meadow-380.ivy.co.clearstreet.io")
          service: api@internal
          tls:
            domains:
              - main: traefik.cool-meadow-380.ivy.co.clearstreet.io
        web:
          rule: Host("web.cool-meadow-380.ivy.co.clearstreet.io")
          service: web
          tls:
            domains:
              - main: web.cool-meadow-380.ivy.co.clearstreet.io
      services:
        tilt:
          loadBalancer:
            servers:
              - url: http://localhost:10350
        web:
          loadBalancer:
            servers:
              - url: http://web.localkube.co.clearstreet.io:8085
      middlewares:
        web:
          headers:
            customRequestHeaders:
              url: web.localkube.co.clearstreet.io

Routing requests to certain services, such as Tilt, which run outside of the kind cluster is straightforward. As the example Traefik configuration shows, we just route such requests to localhost with the appropriate port. However, "handing off requests to the Ingress Controller" to forward requests to the kind cluster is a bit trickier.
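Since every branch deploy needs the same shape of configuration with a different name, a config like the one above lends itself to being generated. The sketch below builds the same structure as a Python dictionary; the function names are hypothetical, and how Ivy actually produces its Traefik configuration is not described here. Serializing the dictionary to YAML (for example with a YAML library) is left out to keep the example standard-library only.

```python
import json
from typing import Dict, List


def _router(host: str, service: str) -> Dict:
    """One router entry: match on Host and request a TLS domain for it."""
    return {
        "rule": f'Host("{host}")',
        "service": service,
        "tls": {"domains": [{"main": host}]},
    }


def _load_balancer(url: str) -> Dict:
    return {"loadBalancer": {"servers": [{"url": url}]}}


def traefik_config(name: str, cluster_services: List[str], ingress_port: int = 8085) -> Dict:
    """Build a dynamic config for one branch deploy (hypothetical helper)."""
    base = f"{name}.ivy.co.clearstreet.io"
    routers = {
        "tilt": _router(base, "tilt"),                       # Tilt UI, outside kind
        "traefik": _router(f"traefik.{base}", "api@internal"),
    }
    services = {"tilt": _load_balancer("http://localhost:10350")}
    middlewares = {}
    for svc in cluster_services:
        local_host = f"{svc}.localkube.co.clearstreet.io"
        routers[svc] = _router(f"{svc}.{base}", svc)
        services[svc] = _load_balancer(f"http://{local_host}:{ingress_port}")
        # Header name mirrors the example config above.
        middlewares[svc] = {"headers": {"customRequestHeaders": {"url": local_host}}}
    return {"http": {"routers": routers, "services": services, "middlewares": middlewares}}


if __name__ == "__main__":
    print(json.dumps(traefik_config("cool-meadow-380", ["web"]), indent=2))
```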

Getting Ingresses to Work

Our kind cluster is exposed on localhost:8085. That means that requests to 127.0.0.1:8085 are routed to the Ingress Controller in the kind cluster. Great! We can get networking traffic into the cluster. However, we're still left with one problem. For the Ingress Controller to be able to route traffic, it needs hostname information. To get Ingresses to work in localkube we added a DNS entry to our internal network which points *.localkube.co.clearstreet.io to 127.0.0.1. With that, we can curl web.localkube.co.clearstreet.io:8085 and the request is properly routed to the service in the kind cluster.
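To make the role of the hostname concrete, here is a small sketch of the request the Ingress Controller ends up seeing. It builds (but does not send) a request aimed at 127.0.0.1:8085 with an explicit Host header, which is what a client sends on its own once the wildcard DNS entry resolves the name to loopback. The `ingress_request` helper is hypothetical.

```python
import urllib.request


def ingress_request(service: str, port: int = 8085) -> urllib.request.Request:
    """Build a request to the local ingress port carrying the service hostname."""
    hostname = f"{service}.localkube.co.clearstreet.io"
    return urllib.request.Request(
        f"http://127.0.0.1:{port}/",
        # curl sends the same header, port included, when given the hostname.
        headers={"Host": f"{hostname}:{port}"},
    )


if __name__ == "__main__":
    req = ingress_request("web")
    print(req.full_url, req.get_header("Host"))
```

The address alone only reaches the Ingress Controller; the Host header is what lets it pick the right service.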

Now, all that’s left is pointing incoming requests for services in the kind cluster to the Ingress Controller. As the example Traefik config illustrates, when a request comes into Traefik for web.cool-meadow-380.ivy.co.clearstreet.io, the URL header is rewritten to web.localkube.co.clearstreet.io and we forward the request to port 8085. With this setup, a developer or user can enter web.cool-meadow-380.ivy.co.clearstreet.io in their browser and access an instance of the website running in their branch deploy.
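The rewrite itself is a simple mapping from the public branch deploy hostname to the localkube hostname. The sketch below expresses that mapping in Python purely for illustration; in practice Traefik applies it through its configuration, and the `rewrite_host` function and the set of non-cluster prefixes are assumptions.

```python
from typing import Optional, Tuple

IVY_SUFFIX = ".ivy.co.clearstreet.io"
LOCALKUBE_SUFFIX = ".localkube.co.clearstreet.io"
NON_CLUSTER_PREFIXES = {"traefik"}  # served by Traefik itself, not the kind cluster


def rewrite_host(public_host: str, ingress_port: int = 8085) -> Optional[Tuple[str, str]]:
    """Map "<service>.<deploy>.ivy..." to (localkube host, upstream URL).

    Returns None for hosts that are not in-cluster services, such as the
    bare deploy domain that serves Tilt.
    """
    if not public_host.endswith(IVY_SUFFIX):
        return None
    labels = public_host[: -len(IVY_SUFFIX)].split(".")
    if len(labels) != 2:  # expect exactly "<service>.<deploy-name>"
        return None
    service, _deploy_name = labels
    if service in NON_CLUSTER_PREFIXES:
        return None
    local_host = f"{service}{LOCALKUBE_SUFFIX}"
    return local_host, f"http://{local_host}:{ingress_port}"


if __name__ == "__main__":
    print(rewrite_host("web.cool-meadow-380.ivy.co.clearstreet.io"))
```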

Making Ivy Fast

Branch deploy creation initially took 10 to 15 minutes. While this was a great start, we felt it was too slow to make branch deploys a natural part of a user’s workflow. Our goal was to reduce branch deploy creation time to under 5 minutes: fast enough that once you’ve kicked off a branch deploy creation, it would be done by the time you read a few emails or responded to some Slack messages.

We realized early on that branch deploy creation time was mainly taken up by machine provisioning and dependency installation. There wasn’t much we could do to bring down machine creation time. We could have had “hot” machines ready to go, but that would increase cost and we didn’t feel initial usage would warrant it, although we may revisit the idea in the future. Instead, we decided to use a nightly cron job to build an AMI that has all of the dependencies pre-installed. Using this AMI significantly improved branch deploy creation times. Now branch deploys take only 4 to 5 minutes to start up.
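With a new AMI built every night, provisioning needs a way to pick the most recent one. A minimal sketch of that selection follows, assuming image records shaped like the entries returned by EC2's DescribeImages call (`ImageId` and an ISO 8601 `CreationDate`); the image IDs are made up and the `latest_image` helper is hypothetical.

```python
from datetime import datetime
from typing import Dict, List, Optional

EC2_DATE_FORMAT = "%Y-%m-%dT%H:%M:%S.%fZ"  # e.g. "2021-09-01T03:00:12.000Z"


def latest_image(images: List[Dict[str, str]]) -> Optional[Dict[str, str]]:
    """Return the most recently created image record, or None if there are none."""
    if not images:
        return None
    return max(
        images,
        key=lambda image: datetime.strptime(image["CreationDate"], EC2_DATE_FORMAT),
    )


if __name__ == "__main__":
    nightly_builds = [
        {"ImageId": "ami-hypothetical-1", "CreationDate": "2021-09-01T03:00:12.000Z"},
        {"ImageId": "ami-hypothetical-2", "CreationDate": "2021-09-02T03:00:09.000Z"},
    ]
    print(latest_image(nightly_builds)["ImageId"])
```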

Ivy in Action

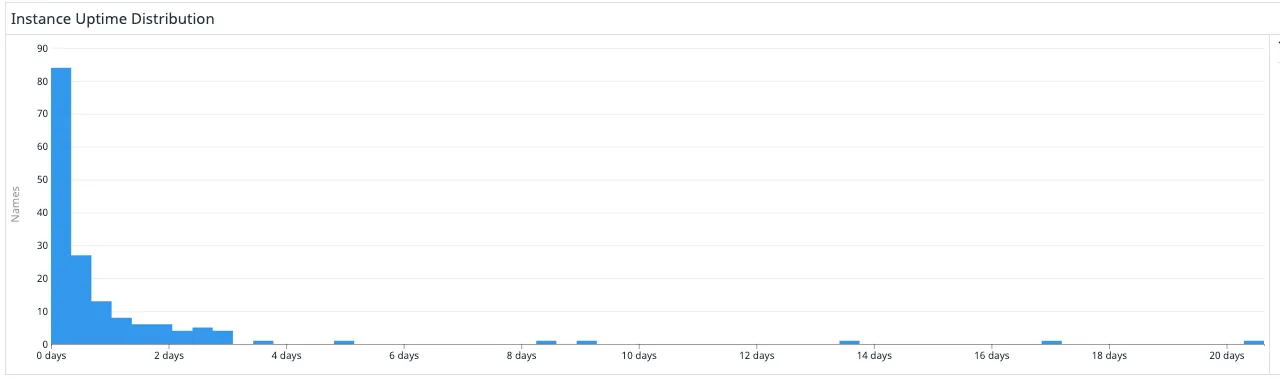

We released Ivy internally in September and have gotten great feedback from engineers as well as other stakeholders, allowing us to quickly iterate on pain points and improve Ivy. Our teams are actively using branch deploys to test frontend changes as well as backend improvements. To date, Ivy has performed around 500 branch deploys, and that number is set to keep growing. As the graph below shows, almost all branch deploys are active for less than two days, indicating that our users are truly taking advantage of branch deploys to quickly test changes, terminate once testing is complete, and spin up new branch deploys for other tests.

Distribution of branch deploy uptime over one month
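A figure like "almost all branch deploys are active for less than two days" can be computed from recorded uptimes. The sketch below shows the calculation on made-up sample values; it is not Ivy's real data, and the `share_active_under` helper is hypothetical.

```python
from datetime import timedelta
from typing import List


def share_active_under(uptimes: List[timedelta], limit: timedelta = timedelta(days=2)) -> float:
    """Fraction of branch deploys whose uptime was below the limit."""
    if not uptimes:
        return 0.0
    return sum(1 for uptime in uptimes if uptime < limit) / len(uptimes)


if __name__ == "__main__":
    # Illustrative values only.
    sample = [
        timedelta(hours=1),
        timedelta(hours=5),
        timedelta(days=1),
        timedelta(days=3),
    ]
    print(f"{share_active_under(sample):.0%} of sample deploys ran under two days")
```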

The Future of Ivy

Ivy has made branch deploys — on-demand, ephemeral, isolated environments — a reality at Clear Street. Since its release, we’ve added features such as custom instance sizes and significantly improved branch deploy creation times. We’re also working on onboarding more technologies such as Snowflake to branch deploys and improving the developer experience by making seeding a branch deploy environment with useful data as easy as clicking a button.

We’ve learned that the ability to create isolated and ephemeral environments is very powerful. We have plans to use Ivy to support robust end-to-end testing of our system and have even thought about using Ivy to build public sandboxes and extend Ivy to create on-demand development environments. We’ve also learned that investing in robust developer tooling is worth the effort and that onboarding one tool can open the door to many possibilities. In Ivy’s case, previous work setting up a local Kubernetes environment and bringing service-level deploys to Clear Street in addition to investing in tools like Terraform is what made branch deploys possible.

Co-authored by:

Joseph DeChicchis & Frank Bagherzadeh
